Product & Design Pulse v79

IP Battles Heat Up Everywhere 🔥

Welcome to this week’s edition of Product & Design Pulse, where we explore the latest in tech, product, design, and innovation! Last week was about who controls what — and at what cost. Across the industry, the fight for ownership intensified on every front: Disney and Paramount fired legal volleys at ByteDance's Seedance 2.0 for treating Hollywood IP like open-source clip art, the EU moved to pry open WhatsApp as a distribution channel for rival AI assistants, and a bipartisan Senate bill landed that would force every AI company to disclose exactly which copyrighted works power their models. Meanwhile, OpenAI's accelerating commercialization came into sharper focus as it rolled out ads in ChatGPT, quietly disbanded its mission alignment team, and saw a researcher resign with a public warning that the company is repeating Facebook's mistakes — all while its hardware ambitions hit branding and timeline setbacks that push its first device to 2027. The through-line is unmistakable: as AI embeds deeper into infrastructure, creative economies, and consumer trust, the companies building fastest are being forced to answer harder questions about what they owe — to users, to creators, to the grid, and to the governance structures they keep reorganizing out of existence.

Last week…

Spotify's Best Engineers Now Supervise Code — They Don't Write It

Spotify co-CEO Gustav Söderström revealed during the company's Q4 earnings call that its most senior developers haven't manually written a line of code since December, instead using an internal system called "Honk" powered by Claude Code to generate and deploy features entirely through Slack. The claim positions Spotify as one of the first major consumer tech companies to publicly frame AI-generated code as the default mode of engineering, with 50+ features shipped in 2025 under this workflow. If this model scales across the industry, the role of the software engineer shifts permanently from builder to orchestrator — raising urgent questions about code quality, security exposure, and whether "AI velocity" becomes an excuse for headcount reduction.

Disney Fires Legal Shot at ByteDance Over Seedance's IP Free-for-All

Disney sent a cease-and-desist letter to ByteDance after its newly launched Seedance 2.0 AI video generator produced content featuring Marvel, Star Wars, and Family Guy characters with no apparent IP safeguards, calling it a "virtual smash-and-grab." The action — quickly followed by Paramount — highlights how Chinese AI video models are launching without the licensing frameworks that U.S. companies like OpenAI (which signed a $1 billion Disney deal for Sora) have negotiated. The episode sharpens the emerging divide in generative AI: companies that license IP will gain distribution advantages, while those that don't will face escalating legal and regulatory risk that could reshape the competitive map for AI-generated media.

Anthropic Pledges to Cover Consumer Energy Costs From Its Data Centers

Anthropic committed to covering 100% of grid infrastructure upgrade costs and demand-driven electricity price increases caused by its data centers, including investing in net-new power generation and curtailment systems to reduce peak grid strain. The move preempts what is becoming a significant political liability for the AI industry: the risk that ratepayers subsidize the compute buildout while hyperscalers capture the value. As national AI infrastructure demand climbs toward 50 gigawatts or more, voluntary cost-absorption commitments like this may become table stakes for securing permitting and community support — and a meaningful differentiator as regulatory scrutiny of AI's energy footprint intensifies.

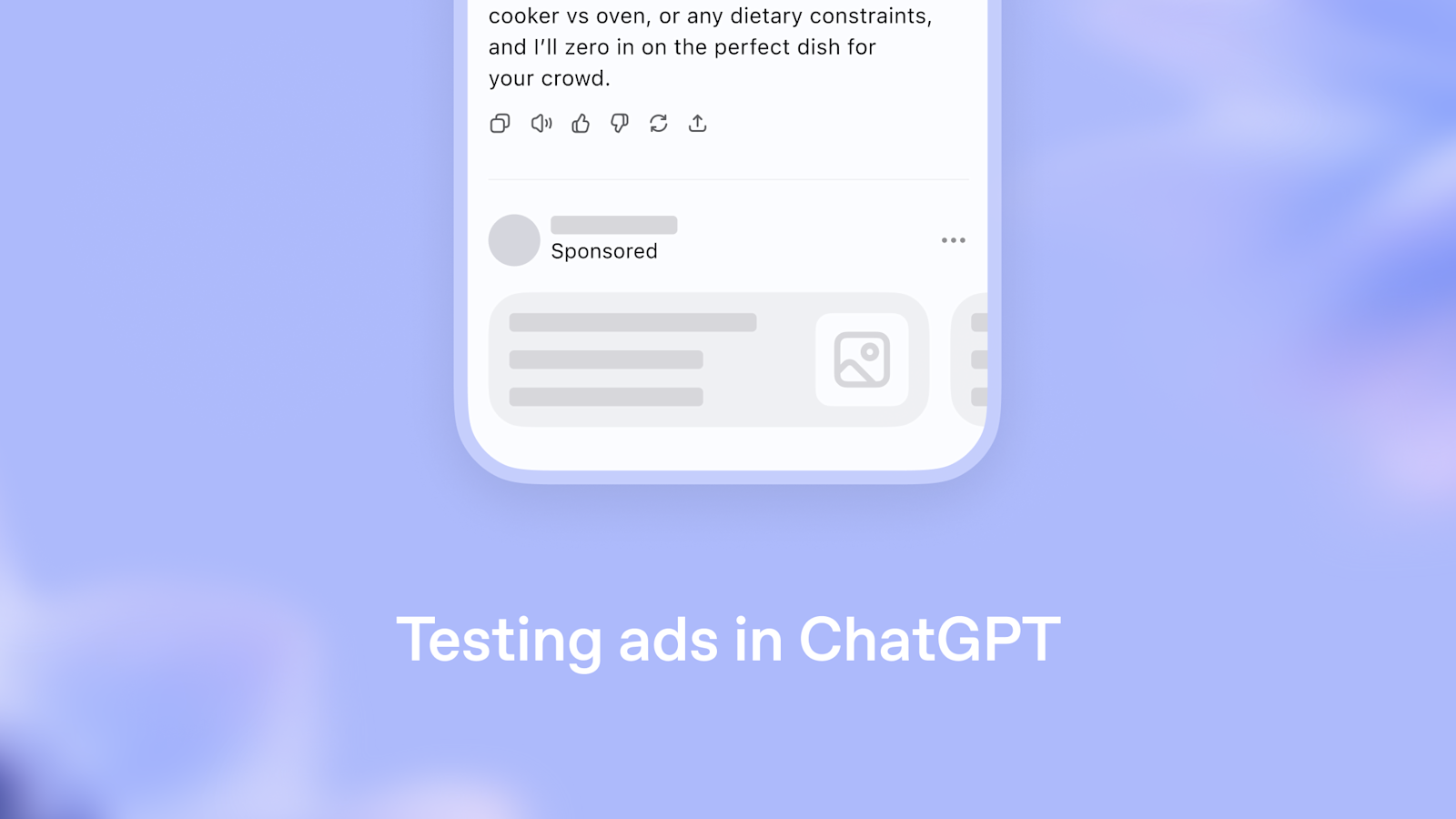

A Former OpenAI Researcher Warns ChatGPT Ads Are the Facebook Playbook All Over Again

OpenAI researcher Zoë Hitzig resigned on the day ChatGPT began testing ads for Free and Go tier users, publishing a New York Times essay arguing that advertising built on the most intimate archive of human thought ever assembled creates manipulation risks that existing frameworks cannot address. OpenAI insists ads won't influence ChatGPT's answers and that user data stays private from advertisers, but the company has enabled ad personalization by default — matching ads to conversation topics, chat history, and prior interactions. With an IPO targeted for Q4 2026 and aspirations of a $1 trillion valuation, the incentive structure now points toward ever-deeper monetization of user data, making the gap between OpenAI's stated safeguards and its economic trajectory the central trust question for the consumer AI market.

OpenAI Quietly Disbanded Its Mission Alignment Team

OpenAI dissolved its mission alignment team in recent weeks and reassigned its seven members to other teams, with former leader Joshua Achiam taking a new title as "chief futurist." The team was created in 2024, on the same day then-CTO Mira Murati unexpectedly departed, to promote OpenAI's stated mission of ensuring AGI benefits humanity. The dissolution continues a pattern of safety and alignment infrastructure being reorganized or deprioritized at OpenAI — a signal that institutional commitment to mission governance is struggling to survive the company's accelerating commercialization.

Bipartisan CLEAR Act Would Force AI Companies to Disclose Copyrighted Training Data

Senators Adam Schiff and John Curtis introduced the CLEAR Act, which would require AI companies to file detailed notices with the U.S. Copyright Office listing every copyrighted work used in training datasets — both for new models and retroactively for those already on the market. The bill stops short of mandating licensing, but creates a public database and imposes civil penalties up to $2.5 million for non-disclosure, with backing from SAG-AFTRA, the WGA, and the Authors Guild (though notably not the MPA). By establishing transparency as the regulatory beachhead rather than licensing, the legislation creates a disclosure infrastructure that could become the foundation for future compensation frameworks — and immediately increases legal exposure for any AI company that has been opaque about its training data.

Autodesk Sues Google Over "Flow" Trademark in the AI Filmmaking Space

Autodesk filed a trademark infringement suit against Google in San Francisco federal court, alleging Google launched an AI filmmaking tool under the "Flow" name in May 2025 despite Autodesk having used that trademark since 2022 — and after Google reportedly assured Autodesk it would not commercialize the name. The complaint alleges Google filed a trademark application in Tonga, where filings are not public, to lay groundwork for U.S. protection while marketing Flow at events including Sundance. The case underscores how the rush to claim AI-powered creative tooling is generating trademark collisions that mirror the broader platform land-grab — and how a $3.9 trillion company can overwhelm a $51 billion competitor's brand position through sheer market presence.

OpenAI Drops "io" Branding and Pushes Hardware Launch to 2027

OpenAI confirmed in a court filing that it will not use the "io" name for its AI hardware devices, abandoning the brand inherited from its $6.5 billion acquisition of Jony Ive's startup after a trademark lawsuit from audio device maker iyO. The filing also revealed that the first device — described as a screenless desk companion designed to work alongside a phone and laptop — won't ship to customers before late February 2027, roughly six months later than previously indicated. The combined branding retreat and timeline slip expose how difficult the software-to-hardware transition remains: even with Ive's design pedigree and billions in capital, OpenAI faces the trademark, manufacturing, and go-to-market complexities that have humbled every recent AI hardware entrant.

EU Moves to Force Meta to Reopen WhatsApp to Rival AI Assistants

The European Commission sent Meta a Statement of Objections and signaled it intends to impose interim measures requiring the company to reverse its January 2026 policy blocking third-party AI assistants from WhatsApp, which effectively made Meta AI the sole AI chatbot available to the platform's 3 billion users. The Commission's preliminary view is that Meta breached EU antitrust rules by leveraging WhatsApp's dominance to give its own AI assistant an unfair advantage in the rapidly growing market for conversational AI. If interim measures are imposed, the case would set a significant precedent — establishing that dominant messaging platforms cannot function as walled gardens for AI distribution, and reinforcing the EU's position that antitrust enforcement must move at the speed of AI market formation.

📱 Product & Feature Highlights

v1.116 is rolling out now! For all the overthinkers and perfectionists out there, we're launching Drafts.

— Bluesky (@bsky.app), 2026-02-09T20:21:49.929Z